Nerd Corner #52

Surgical reading, GPT-3 and more!

Hi All!

After 6+ weeks of hot Florida weather, I’m back home in San Francisco. It feels good to be home but I already miss the Venezuelan food 🤤.

Here things seem to be going back to normal very slowly. The pandemic is still raging and cases are going up, but there are many more people out and about than a few months ago. At least most people seem to be following the guidelines and wearing masks.

What I am reading 📚

I started reading “Made to Stick” by Chip and Dan Heath. This is the last “marketing” book on my 2020 reading list.

With this one, I’m trying a new approach. Rather than attempting to read it cover to cover, I’ll do a surgical reading: read the preface and epilogue, then scan the table of contents and the index, writing down all the ideas and concepts that interest me. Only after this preliminary step will I dive into the pages that contain the ideas that stood out to me.

The point of this type of reading is to get an overarching view of the book and build a map of it on an index card that you can always go back to when you revisit the book. (I always read with a card close by so I can jot down thoughts and notes and stick it in the book.)

—

Now, speaking of the 2020 reading list, I realized that having such a rigid list is not very good. I’m not even halfway through it because, as I read books and essays, my curiosity wanders and I end up picking up other books along the way. So for the rest of the year, I’ll fall back on the list whenever I’m out of reading material, but I’ll let my curiosity drive me whenever possible. It just seems like a much better and more enjoyable approach.

Nerd Corner 🤓

We need to talk about GPT-3 since it’s been all over the tech news and “tech” Twitter.

GPT-3 is the latest language model released by OpenAI. It is massive (175 billion parameters) and was trained on a huge dataset of web content, which makes it seem almost magical.

Soon after it was released, OpenAI also published an API to let users play with the system, and that’s when everybody started going crazy, and for good reason! One user primed the model (gave it a few good examples) in such a way that it could generate functioning code for an app from a natural language description of the app’s expected behavior. Another one created a powerful search engine on top of GPT-3 in just a few minutes (compare this to the time it took Google to get there). There are lots of other examples compiled in this Twitter thread.
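To make “priming” a bit more concrete, here is a minimal sketch of how a few examples and a new request can be stitched into a single text prompt. The `Description:`/`Code:` layout, the function name, and the sample pairs are all made up for illustration; this is not the prompt that user actually wrote, and it doesn’t call the OpenAI API at all.

```python
def build_few_shot_prompt(examples, query):
    """Join (description, code) example pairs and a new description into one prompt."""
    parts = []
    for description, code in examples:
        parts.append(f"Description: {description}\nCode: {code}\n")
    # Leave the final "Code:" slot empty so the model continues from there.
    parts.append(f"Description: {query}\nCode:")
    return "\n".join(parts)


# Hypothetical examples: plain-English descriptions paired with HTML snippets.
examples = [
    ("a button that says Hello", "<button>Hello</button>"),
    ("a red heading that says Welcome", '<h1 style="color: red">Welcome</h1>'),
]

prompt = build_few_shot_prompt(examples, "a link to example.com that says Visit")
print(prompt)
```

The idea is that the model sees the pattern in the examples and fills in the last empty slot in the same style.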

While it might seem like just a brute-force approach to AI (175 billion parameters is a huuuuge number, you need a data center to run it), OpenAI is leading the way in showing us what these AI models are and will be capable of doing in the very near future. I’m sure that in 5-10 years models like this will revolutionize, once again, how we work, do research, and innovate.
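For a rough sense of why 175 billion parameters is too much for a regular computer, here is some back-of-the-envelope arithmetic. It assumes each parameter is stored as a 32-bit (4 bytes) or 16-bit (2 bytes) number; how OpenAI actually stores the weights is not something I know, so treat the numbers as a ballpark only.

```python
PARAMETERS = 175_000_000_000  # the parameter count quoted above


def weights_size_gb(parameters, bytes_per_parameter):
    """Size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return parameters * bytes_per_parameter / 1e9


# Assumed storage formats, for illustration only.
fp32_gb = weights_size_gb(PARAMETERS, 4)
fp16_gb = weights_size_gb(PARAMETERS, 2)

print(f"32-bit weights: {fp32_gb:.0f} GB")  # 700 GB
print(f"16-bit weights: {fp16_gb:.0f} GB")  # 350 GB
```

Either way, that is just the weights, before any memory needed to actually run the model.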

To understand more about what exactly GPT-3 is, I recommend these videos:

Computerphile on GPT-3. This is a nice and somewhat short introduction.

GPT-3 Paper explanation. This is an in-depth explanation of the research behind GPT-3.

Cool Finds 🤯

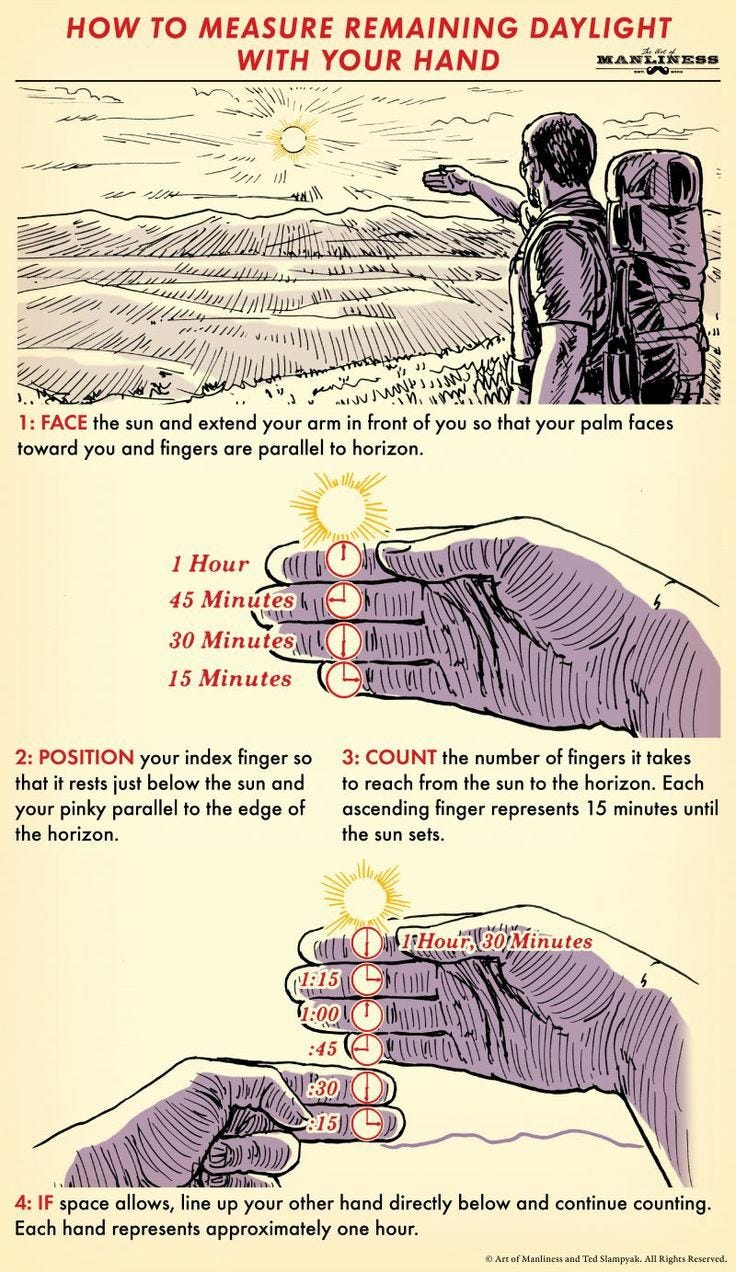

Survival is my latest discovery on Twitter. The account publishes all kinds of survival videos and guides, like this graphic that shows how to tell how many hours of daylight are left in the day!

Remember Blockbuster? Here’s a time-lapse of its boom-and-bust cycle.

Amazon turned 25 years old last week. Here’s a video of Jeff Bezos talking about Amazon’s focus on customer experience, ease of use, and low prices. Whatever you think about the company, there’s no doubt that a big part of its success is due to long-term thinking and customer obsession.